Drupal Data Fetcher

Drupal Data Fetcher is a Python toolkit for downloading, synchronizing, and managing open data from the Drupal.org API, with support for both local storage and Google Cloud Storage. It is designed for researchers, data engineers, and anyone interested in large-scale analysis of Drupal.org data.

Features

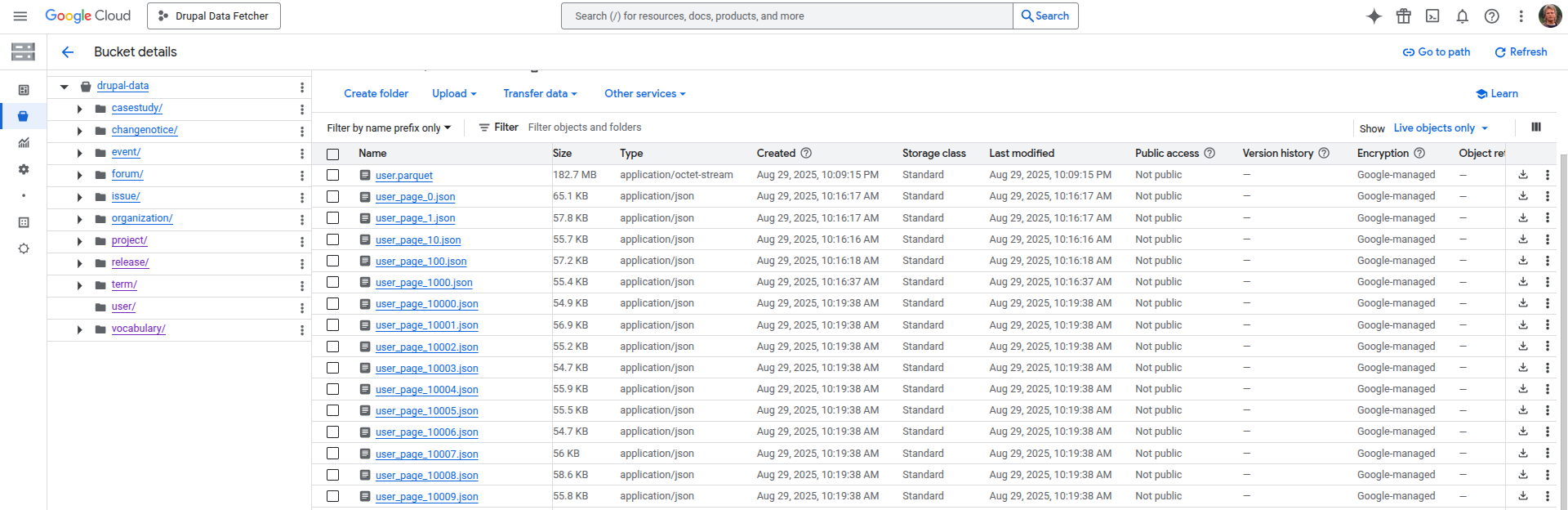

- Fetches all major Drupal.org datasets (projects, issues, releases, users, etc.)

- Handles API rate limits and retries automatically

- Parallel upload/download to Google Cloud Storage

- CLI for easy automation and scripting

- Extensible and well-documented Python classes
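To illustrate the rate-limit handling mentioned in the list above, here is a minimal sketch of retrying with exponential backoff. It is not the toolkit's actual implementation: `fetch_with_retry` and its parameters are hypothetical names, and the choice to retry on HTTP 429 and 5xx responses is an assumption.

```python
import time
import urllib.error
import urllib.request


def fetch_with_retry(url, max_attempts=5, base_delay=1.0,
                     opener=urllib.request.urlopen, sleep=time.sleep):
    """Fetch `url`, retrying on HTTP 429 and 5xx with exponential backoff.

    Hypothetical helper for illustration; `opener` and `sleep` are
    injectable so the retry logic can be exercised without network access.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            with opener(url) as response:
                return response.read()
        except urllib.error.HTTPError as error:
            retryable = error.code == 429 or 500 <= error.code < 600
            if not retryable or attempt == max_attempts:
                raise
            # Honor a numeric Retry-After header when present;
            # otherwise double the delay on each attempt.
            retry_after = error.headers.get("Retry-After") if error.headers else None
            if retry_after and retry_after.isdigit():
                delay = float(retry_after)
            else:
                delay = base_delay * 2 ** (attempt - 1)
            sleep(delay)
```

With the defaults, the waits between attempts are 1, 2, 4, and 8 seconds unless the server supplies its own `Retry-After` value.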

Quickstart

1. Local setup

- Install dependencies and activate the virtual environment:

# Install dependencies

make install

# Start virtual env

source .venv/bin/activate

2. Cloud setup

- Edit the `.env` file with your credentials:

# Basic project info.

PROJECT_ID=your-gcp-project-id # e.g. drucom

PROJECT_NAME='Drupal Data Fetcher'

# User/Bot in charge of publishing data to cloud.

SERVICE_ACCOUNT=drupal-data-fetcher

GOOGLE_APPLICATION_CREDENTIALS=/path/to/keys/drupal-data-fetcher.json

# Cloud Storage bucket.

# e.g. choose-a-globally-unique-name

BUCKET_ID=your-gcs-bucket-name

BUCKET_REGION=europe-west4 # or e.g. us-central1

# Cloud BigQuery dataset.

BIGQUERY_DATASET_ID=drupal

# (optional) Billing account - set spending limits on it for safety.

# Find yours: `gcloud billing accounts list`

BILLING_ACCOUNT_ID=000000-111111-000001

- Run the setup script:

# 1. Make sure your .env file is configured

# 2. Load environment variables

source .env # or direnv reload

# 3. Run the setup script

./script/setup-gcp.sh

# NB: You can revert everything with this other script afterward:

# ./script/cleanup-gcp.sh
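Before running the setup script, it can help to check that the `.env` file defines every variable the script is likely to need. The sketch below parses the file with the standard library and reports missing keys. Treating these six keys as required is an assumption based on the sample file above, and `parse_env` and `missing_keys` are hypothetical helpers, not part of the toolkit.

```python
import shlex

# Assumed to be required, based on the sample .env shown above.
REQUIRED_KEYS = (
    "PROJECT_ID",
    "SERVICE_ACCOUNT",
    "GOOGLE_APPLICATION_CREDENTIALS",
    "BUCKET_ID",
    "BUCKET_REGION",
    "BIGQUERY_DATASET_ID",
)


def parse_env(text):
    """Parse KEY=value lines, ignoring comments and shell-style quotes."""
    values = {}
    for line in text.splitlines():
        # comments=True drops "# ..." comments, including trailing ones.
        tokens = shlex.split(line, comments=True)
        if len(tokens) != 1 or "=" not in tokens[0]:
            continue  # blank line, comment, or something that is not KEY=value
        key, _, value = tokens[0].partition("=")
        values[key] = value
    return values


def missing_keys(values, required=REQUIRED_KEYS):
    """Return the required keys that are absent or empty."""
    return [key for key in required if not values.get(key)]


if __name__ == "__main__":
    with open(".env", encoding="utf-8") as handle:
        missing = missing_keys(parse_env(handle.read()))
    if missing:
        raise SystemExit("Missing in .env: " + ", ".join(missing))
    print("All required variables are set.")
```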

3. Extract data

First, synchronize as many data files as possible from GCP, so that only the missing data has to be fetched from Drupal.org:

make cli sync project

make cli sync-all

Then fetch missing pages from Drupal.org:

make cli extract project

make cli extract-all

Next, deploy existing data to BigQuery to create tables:

make cli deploy-all

Finally, sync everything again to push newly fetched data to GCP:

make cli sync-all
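The four steps above can be chained in a small driver script that stops at the first failure. This is a sketch only: it shells out to the same `make cli` commands listed above, and `run_pipeline` is a hypothetical wrapper, not something the project ships.

```python
import subprocess

# Same commands, in the same order, as the steps described above.
PIPELINE = [
    ["make", "cli", "sync-all"],     # pull existing files from GCP
    ["make", "cli", "extract-all"],  # fetch missing pages from Drupal.org
    ["make", "cli", "deploy-all"],   # create BigQuery tables
    ["make", "cli", "sync-all"],     # push newly fetched data back to GCP
]


def run_pipeline(steps=PIPELINE, runner=subprocess.run):
    """Run each step in order; check=True aborts on the first non-zero exit."""
    for command in steps:
        print("Running:", " ".join(command))
        runner(command, check=True)


if __name__ == "__main__":
    run_pipeline()
```

Because `check=True` raises `subprocess.CalledProcessError` on failure, a failed extract never reaches the deploy or final sync steps.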

4. Incremental updates

Now you can use the incremental update command to keep your data current:

make data

This command fetches only the latest changes from Drupal.org and updates your BigQuery tables efficiently.
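One common way to implement this kind of incremental update is a high-water mark: remember the newest modification timestamp already processed and keep only records changed after it. The sketch below shows the idea; it is not necessarily how `make data` works. The `changed` field (a Unix timestamp) and the `select_changed` helper are assumptions for illustration.

```python
def select_changed(records, last_sync):
    """Return records modified after `last_sync` and the new high-water mark.

    Assumes each record is a dict with a `changed` Unix timestamp,
    given either as an int or as a numeric string.
    """
    fresh = [r for r in records if int(r["changed"]) > last_sync]
    # If nothing changed, the mark stays where it was.
    new_mark = max((int(r["changed"]) for r in fresh), default=last_sync)
    return fresh, new_mark


if __name__ == "__main__":
    records = [
        {"nid": "1", "changed": "1700000000"},
        {"nid": "2", "changed": "1700000500"},
        {"nid": "3", "changed": "1700001000"},
    ]
    fresh, mark = select_changed(records, last_sync=1700000000)
    print([r["nid"] for r in fresh], mark)
```

Persisting the returned mark between runs means each update only has to process records newer than the previous run.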

Documentation

- CLI Reference - All available commands and usage

- Data Fetcher - API client for Drupal.org

- Data Extractor - Data collection and sync

- Data Transformer - JSON to Parquet conversion

- Data Loader - Cloud storage and BigQuery

- Data Updater - Incremental updates

Supported Resources

| Resource | Description |
|---|---|
| project | Drupal modules, themes, distributions |
| issue | Bug reports, feature requests |
| release | Software releases and versions |
| user | User profiles and activity |
| forum | Forum posts and discussions |
| organization | Companies and groups |
| changenotice | API change notifications |
| casestudy | Case studies and examples |
| event | Events and meetups |
| term | Taxonomy terms |
| vocabulary | Taxonomy vocabularies |

Testing

make test

License

MIT License. See the LICENSE file.